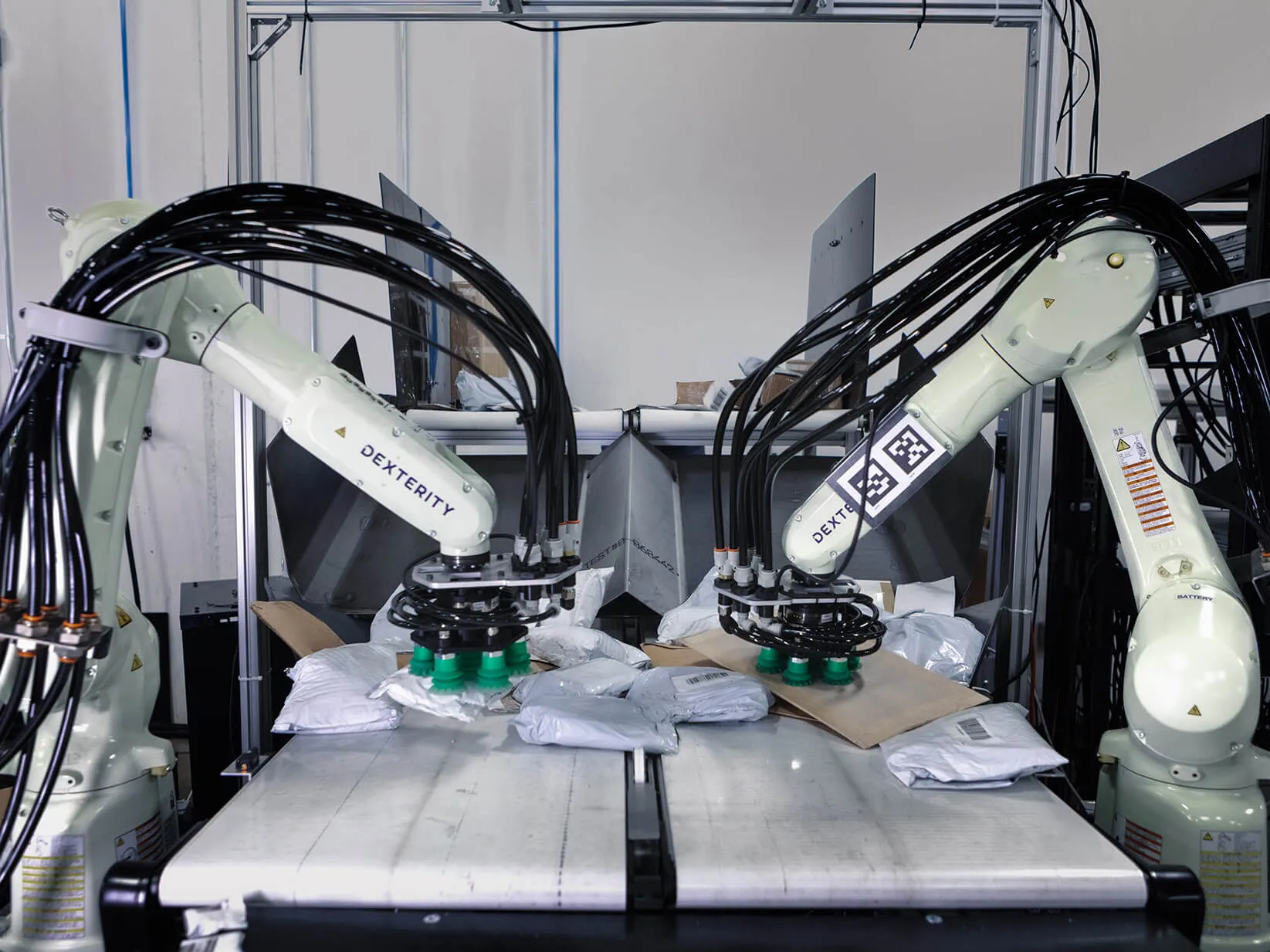

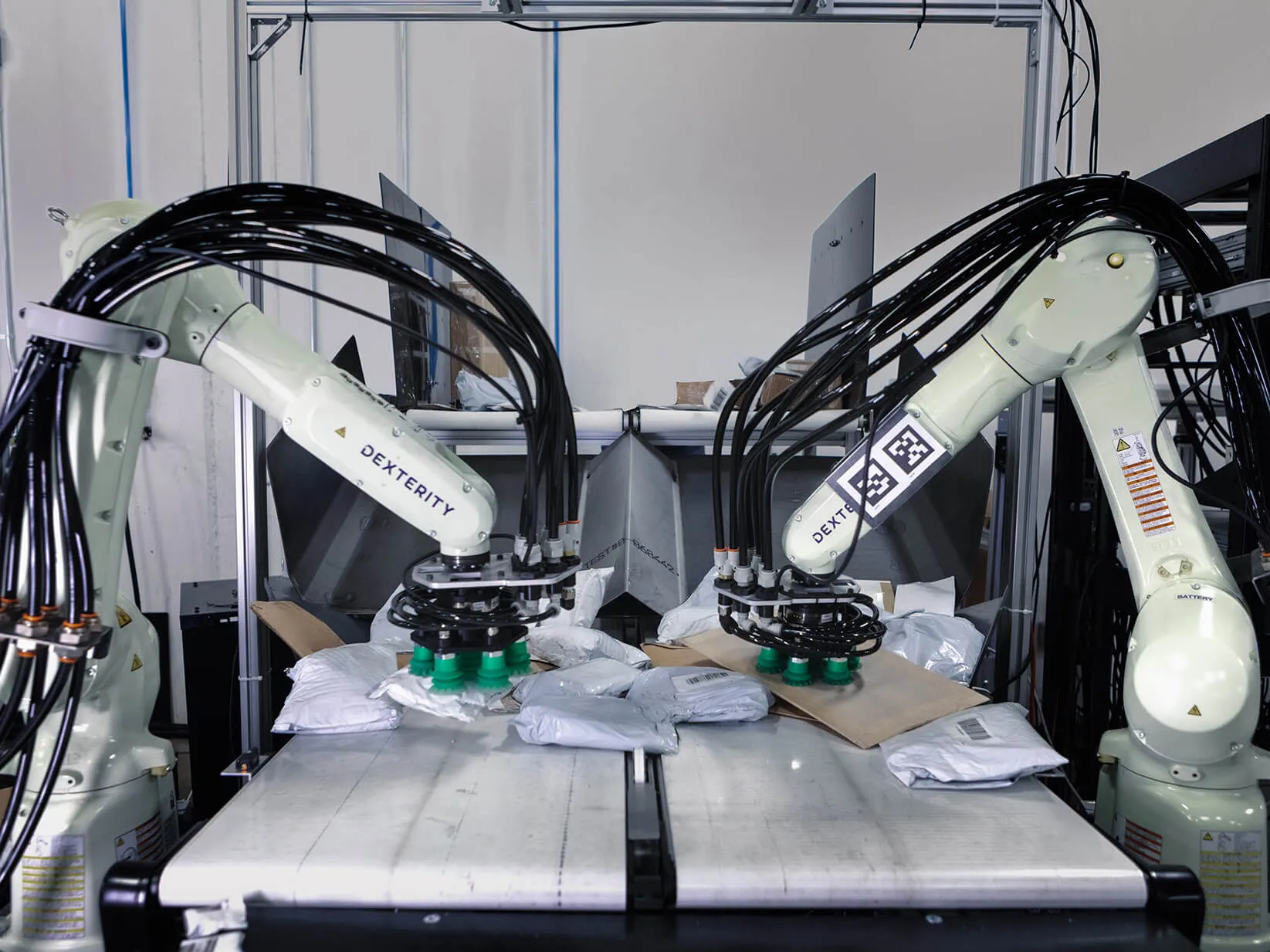

Dexterity's Platform: Powering Automation in Complex Environments

Most robots are optimized to perform a single action: welding a chassis, buffing an orthopedic hip joint, assembling a printed circuit board. They repeat the same sequence of steps again and again with exacting precision. But in a modern warehouse where a mixed variety of goods needs to be sorted, demands are always changing. One package might be a heavy box, the next a slim envelope, and the next a perforated crate of earthworms. Each might also have a different destination: a conveyor belt, a stationary pallet, or a rolling cart. Parcels may arrive at random intervals and take varying amounts of time to transport. A robot specialized for one task quickly fails in this environment because, as Dexterity’s founding engineer Robert Sun says, “you can’t hard-code in all these constraints and expect things to go exactly as planned.”

To succeed in complicated scenarios, a robot must be able to respond to its surroundings and change course when necessary. It also needs to be easy to program for new tasks, such as picking new types of objects or working in a modified workspace, without months of re-engineering. Dexterity has built a suite of tools that fuses the raw capabilities of artificial intelligence (AI), computer vision, and force sensors, giving robots the intelligence required to navigate a complex workplace. Just as a single brain governs a human’s limbs and senses, this common platform controls Dexterity’s robot fleet, whether the robots are working together on one task or distributed across various tasks in a warehouse.

The Dexterity platform is organized into several layers of software, each with a specific role: from the low-level instructions that tell each joint in a robot to move to a particular position, to logic that encodes more abstract tasks like “grab package,” up to the highest level, which coordinates all the robots in the warehouse. Because only the bottom layer talks directly to the robot, Dexterity’s team can write higher-level commands that plug easily into the existing software without wrestling with hardware-level code.
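To make the layering concrete, here is a minimal Python sketch. Every class and method name in it (`JointLayer`, `SkillLayer`, `FleetLayer`, `grab_package`, `dispatch`) is hypothetical and is not Dexterity’s API; the point is only that the top two layers never touch hardware, so the bottom layer is the single place that would need to change for a different robot.

```python
# Hypothetical three-layer stack; names are illustrative, not Dexterity's API.


class JointLayer:
    """Bottom layer: the only code that would talk to robot hardware."""

    def __init__(self, num_joints: int) -> None:
        self.positions = [0.0] * num_joints
        self.log: list[str] = []

    def move_joints(self, targets: list[float]) -> None:
        if len(targets) != len(self.positions):
            raise ValueError("expected one target per joint")
        # A real driver would stream these setpoints to motor controllers.
        self.positions = list(targets)
        self.log.append(f"joints -> {targets}")


class SkillLayer:
    """Middle layer: abstract tasks expressed as sequences of joint moves."""

    def __init__(self, joints: JointLayer) -> None:
        self.joints = joints

    def grab_package(self, approach: list[float], grasp: list[float]) -> None:
        self.joints.move_joints(approach)  # hover above the package
        self.joints.move_joints(grasp)     # descend into the grasp pose


class FleetLayer:
    """Top layer: assigns tasks to robots without touching joints directly."""

    def __init__(self, robots: dict[str, SkillLayer]) -> None:
        self.robots = robots

    def dispatch(self, robot_id: str, approach: list[float], grasp: list[float]) -> None:
        self.robots[robot_id].grab_package(approach, grasp)


arm = JointLayer(num_joints=3)
fleet = FleetLayer({"arm-1": SkillLayer(arm)})
fleet.dispatch("arm-1", approach=[0.1, 0.2, 0.3], grasp=[0.1, 0.4, 0.3])
print(arm.positions)  # [0.1, 0.4, 0.3]
```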

Together, these high-level commands form a library of reusable functions that developers can recombine to create new applications on demand. Guided by this common software platform, Dexterity robots excel in messy, unpredictable situations where traditional automation fails.
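One way to picture “recombining a library of commands” is a skill registry, sketched below in Python under loose assumptions: the skill names (`pick`, `place_on_pallet`, `place_on_conveyor`), the dictionary-based world state, and `build_application` are all hypothetical stand-ins, not Dexterity’s actual functions.

```python
from typing import Callable

# Hypothetical skill library: each skill maps a world-state dict to a new one.
Skill = Callable[[dict], dict]
SKILLS: dict[str, Skill] = {}


def skill(name: str) -> Callable[[Skill], Skill]:
    """Register a function under a reusable skill name."""
    def register(fn: Skill) -> Skill:
        SKILLS[name] = fn
        return fn
    return register


@skill("pick")
def pick(state: dict) -> dict:
    return {**state, "holding": state["next_item"]}


@skill("place_on_pallet")
def place_on_pallet(state: dict) -> dict:
    return {**state, "pallet": state.get("pallet", []) + [state["holding"]], "holding": None}


@skill("place_on_conveyor")
def place_on_conveyor(state: dict) -> dict:
    return {**state, "conveyor": state.get("conveyor", []) + [state["holding"]], "holding": None}


def build_application(steps: list[str]) -> Skill:
    """Compose registered skills, in order, into a new application."""
    missing = [s for s in steps if s not in SKILLS]
    if missing:
        raise KeyError(f"unknown skills: {missing}")

    def run(state: dict) -> dict:
        for step in steps:
            state = SKILLS[step](state)
        return state
    return run


# Two applications built from the same library, with no new low-level code.
palletize = build_application(["pick", "place_on_pallet"])
induct = build_application(["pick", "place_on_conveyor"])
print(palletize({"next_item": "box-17"})["pallet"])     # ['box-17']
print(induct({"next_item": "envelope-3"})["conveyor"])  # ['envelope-3']
```

Because each skill returns a fresh state rather than mutating shared objects, the same skill can appear in many applications without one application’s run affecting another.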

To create a system that responds to variable conditions with near-human precision, Dexterity gives its robots a sense of the physical world. Cameras and sensors send signals for the software to interpret, forming a perception system through which the robots monitor their environment. Algorithms and AI models analyze depth and color information from the cameras to isolate a single package in a cluttered stack. The system can distinguish the boundaries between, say, a poster tube, a pair of jeans packed in a grocery bag, and a soggy cake box, all of which Dexterity robots have encountered in customers’ warehouses.
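The article does not describe Dexterity’s segmentation models, so the Python sketch below swaps in a deliberately simple classical technique: region growing on a depth image, where a jump in depth between neighbouring pixels larger than `max_step` is treated as an object boundary. It ignores color entirely, and the toy depth values and threshold are made up for illustration.

```python
from collections import deque


def segment_by_depth(depth: list[list[float]], max_step: float) -> list[list[int]]:
    """Label 4-connected regions whose neighbouring depths differ by <= max_step.

    A depth jump larger than max_step between adjacent pixels is treated as a
    boundary between objects. Labels start at 0.
    """
    rows, cols = len(depth), len(depth[0])
    labels = [[-1] * cols for _ in range(rows)]
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] != -1:
                continue
            labels[r][c] = next_label
            queue = deque([(r, c)])
            while queue:  # breadth-first flood fill from the seed pixel
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] == -1
                            and abs(depth[ny][nx] - depth[y][x]) <= max_step):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            next_label += 1
    return labels


def nearest_region(depth: list[list[float]], labels: list[list[int]]) -> int:
    """Return the label with the smallest mean depth, i.e. the top of the pile."""
    totals: dict[int, float] = {}
    counts: dict[int, int] = {}
    for depth_row, label_row in zip(depth, labels):
        for d, lab in zip(depth_row, label_row):
            totals[lab] = totals.get(lab, 0.0) + d
            counts[lab] = counts.get(lab, 0) + 1
    return min(totals, key=lambda lab: totals[lab] / counts[lab])


# Toy 3x4 depth image in metres: a near box, a farther box, and the floor.
depth = [
    [0.50, 0.50, 0.80, 0.80],
    [0.50, 0.51, 0.80, 0.81],
    [1.20, 1.20, 1.20, 1.20],
]
labels = segment_by_depth(depth, max_step=0.05)
print(labels)                         # [[0, 0, 1, 1], [0, 0, 1, 1], [2, 2, 2, 2]]
print(nearest_region(depth, labels))  # 0
```

Comparing each pixel only with its neighbour, rather than with a single global threshold, lets a tilted box surface stay one region while still splitting two boxes that sit at different heights.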

The robot must then plan a unique strategy to move each package. Dexterity’s platform uses force control — essentially a robot’s sense of touch — to observe how each package behaves.

When a Dexterity robot manipulates an object, pressure sensors near its grippers send feedback to the server, allowing the AI software to deduce several of the object’s traits. If an object is pliable, resistance builds gradually as the robot applies pressure; contact with a rigid object registers as a sudden impact. The system can also determine a package’s weight: heavier objects tug harder on the sensors and resist being moved. The control system then runs algorithms to determine the best way to handle each parcel: how tightly to grip, how fast to lift, and how gently to place it.
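As a rough illustration of turning force readings into handling decisions, the Python sketch below estimates stiffness as the least-squares slope of grip force versus squeeze distance (shallow for pliable items, steep for rigid ones) and estimates mass from the static vertical load. The stiffness threshold, friction coefficient, safety factors, and lift speeds are all hypothetical values chosen for the example, not Dexterity’s parameters.

```python
from dataclasses import dataclass

G = 9.81  # gravitational acceleration, m/s^2


@dataclass
class HandlingPlan:
    rigid: bool
    mass_kg: float
    grip_force_n: float
    lift_speed_m_s: float


def stiffness_n_per_m(squeeze_m: list[float], force_n: list[float]) -> float:
    """Least-squares slope of grip force vs. squeeze distance.

    A pliable item gives a shallow slope (force builds gradually); a rigid one
    gives a steep slope (force jumps almost as soon as contact is made).
    """
    n = len(squeeze_m)
    mean_x = sum(squeeze_m) / n
    mean_y = sum(force_n) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(squeeze_m, force_n))
    sxx = sum((x - mean_x) ** 2 for x in squeeze_m)
    return sxy / sxx


def plan_handling(squeeze_m: list[float], force_n: list[float], vertical_load_n: float,
                  rigid_threshold: float = 5000.0, friction: float = 0.5) -> HandlingPlan:
    """Turn force readings into grip and lift settings (all thresholds hypothetical)."""
    rigid = stiffness_n_per_m(squeeze_m, force_n) >= rigid_threshold
    mass_kg = vertical_load_n / G  # static load measured while the item is held
    # A two-finger friction grip needs a squeeze force of at least m*g / (2*mu).
    # Use a larger margin for rigid items and a smaller one to avoid crushing soft ones.
    safety = 2.0 if rigid else 1.5
    grip = safety * mass_kg * G / (2 * friction)
    lift_speed = 0.5 if mass_kg < 5.0 else 0.2  # slow down for heavy parcels
    return HandlingPlan(rigid, mass_kg, grip, lift_speed)


squeeze = [0.000, 0.005, 0.010, 0.015]
soft_bag = plan_handling(squeeze, [0.0, 2.0, 4.0, 6.0], vertical_load_n=19.62)
hard_box = plan_handling(squeeze, [0.0, 40.0, 80.0, 120.0], vertical_load_n=78.48)
print(soft_bag.rigid, round(soft_bag.grip_force_n, 2))  # False 29.43
print(hard_box.rigid, hard_box.lift_speed_m_s)          # True 0.2
```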

“Force control gives that extra level of human touch,” Sun explains. “If a person tries to put a book into a slot in a bookshelf, without a sense of touch, you’d be hitting all the books around it. Instead, you feel the books next to the slot. ‘I hit this edge. I’m going to move a little bit to the right.’ That sense of touch is also required for a robot to pack boxes tightly and gently without destroying the item it’s holding. Force control enables that last level of precision.”
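Sun’s bookshelf example can be sketched as a simple force-guided correction loop: sense the sideways contact force, shift a small distance in the direction the neighbour is pushing, and repeat until the force fades. The Python below is an illustrative one-axis version under stated assumptions: `toy_lateral_force` is a made-up spring model of the neighbouring items, and the gain, threshold, and dimensions are hypothetical.

```python
from typing import Callable


def toy_lateral_force(x: float, slot_left: float, slot_right: float,
                      half_width: float, wall_stiffness: float = 2000.0) -> float:
    """Toy spring contact model for an item of half-width `half_width` entering a slot.

    Positive force means a neighbour is pushing the item to the right.
    """
    force = 0.0
    overlap_left = (slot_left + half_width) - x    # > 0: pressing on the left neighbour
    overlap_right = x - (slot_right - half_width)  # > 0: pressing on the right neighbour
    if overlap_left > 0:
        force += wall_stiffness * overlap_left
    if overlap_right > 0:
        force -= wall_stiffness * overlap_right
    return force


def guarded_insert(x0: float, sense: Callable[[float], float], threshold_n: float = 0.5,
                   gain_m_per_n: float = 0.0004, max_steps: int = 100) -> float:
    """Shift sideways along the sensed contact force until contact is nearly gone."""
    x = x0
    for _ in range(max_steps):
        f = sense(x)
        if abs(f) <= threshold_n:
            return x           # clear of both neighbours, within the force tolerance
        x += gain_m_per_n * f  # "I hit this edge": move the way the contact pushes
    raise RuntimeError("no contact-free position found")


def sense(x: float) -> float:
    # A 4 cm item aimed at a 5 cm slot between two neighbours.
    return toy_lateral_force(x, slot_left=0.0, slot_right=0.05, half_width=0.02)


x_final = guarded_insert(0.005, sense)  # start 1.5 cm too far left
print(round(x_final, 4))  # 0.0199
```

The loop is a proportional controller on contact force: each step removes `gain_m_per_n * wall_stiffness` (here 0.8) of the remaining overlap, so it settles without oscillating as long as that product stays below 2.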

Dexterity robots can build on that sense of touch to perform an even more complex feat: collaboration. If a human can’t lift an object alone, they call a friend to help. But in robotics, that kind of cooperation has proven very difficult: two independent robots have to communicate quickly and continuously to lift a load without smashing or toppling it.

Because Dexterity engineers had already developed the perception system, they were able to teach the robots to react in real time to a teammate’s efforts. Now, in customers’ warehouses around the world, if a box is too heavy for one Dexterity robot, another will come to help. The robots can also collaborate with human workers. “It was about taking that sense of touch and projecting to the next level,” Sun says. “Now, when the robot senses force that someone else is applying, it can react to it.”
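A common textbook way for a robot to “react to force that someone else is applying” is admittance control: the robot behaves like a virtual mass and damper, so a partner’s push becomes a motion command. The article does not say which control law Dexterity uses; the Python below is a one-axis admittance sketch with made-up mass, damping, and push values, shown only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Virtual mass-damper along one axis: M * dv/dt + D * v = F_ext.

    The robot treats the force its partner applies as a command: push harder
    and it moves faster, let go and it coasts to a stop.
    """
    mass_kg: float            # virtual inertia the partner feels
    damping_n_s_per_m: float  # resistance to being pushed
    velocity: float = 0.0
    position: float = 0.0

    def step(self, external_force_n: float, dt: float) -> float:
        accel = (external_force_n - self.damping_n_s_per_m * self.velocity) / self.mass_kg
        self.velocity += accel * dt          # semi-implicit Euler: update velocity first,
        self.position += self.velocity * dt  # then integrate position with the new velocity
        return self.velocity


follower = Admittance1D(mass_kg=5.0, damping_n_s_per_m=50.0)
for _ in range(2000):  # 2 s at 1 kHz while a partner pushes the shared box with 10 N
    v = follower.step(external_force_n=10.0, dt=0.001)
print(round(v, 3))  # 0.2, the steady-state speed F / D
```

In a two-robot lift, the follower would run a loop like this on the force it measures through the shared load, so it moves with the leader instead of fighting it; raising the damping makes the follower stiffer and slower to respond.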

This capability gives the system unparalleled flexibility. Rather than installing a dedicated arm for heavy loads, customers can invest in a versatile team of robots that cooperate or work independently, depending on the demands at that moment.
